SchemaMapper IV: I just dropped in, to see what condition my Attr Value Field was in.

Hi FME’ers,

Here’s the 4th in a series of posts on the SchemaMapper transformer. If you haven’t read any of the earlier ones yet, I strongly suggest you start with post #1.

Reminder

Remember that Schema is the FME term for what you might call data model. The source schema is “what we have” and the destination schema is “what we want to get”. The act of connecting the source schema to the destination, to fulfill the “what we want” aspect, is called Schema Mapping.

Usually Schema Mapping is done through connections in a workspace, but it can also be carried out by the SchemaMapper transformer.

Overview

This post will cover conditional Feature Type mapping, which is when the connection between what we have and what we want depends on the value of an attribute.

If you remember post #2, you might recall that the SchemaMapper “Index Mapping” dialog takes care of Feature Type mapping. This dialog has four fields, which in effect form a where clause (FME documentation prefers the term “if”) all by themselves. For example:

if fme_feature_type = xxxx, then set new_fme_feature_type to zzzz

So by adding items to the “Filter Fields” (Where clause) dialog, we are really adding an “AND” to this existing condition; for example:

if fme_feature_type = xxxx AND attribute = yyyy, then set new_fme_feature_type to zzzz
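To make that two-part condition concrete, here is a minimal Python sketch of a single rule. It is a plain-Python model of the logic, not how FME implements the SchemaMapper: a feature is assumed to be a dictionary of attributes, and the function name `apply_rule` is hypothetical.

```python
def apply_rule(feature, source_type, attr_name, attr_value, dest_type):
    """Model one mapping rule as a two-part condition.

    The Index Mapping part tests the feature type; the Filter Fields part
    adds an AND on an attribute value. Returns True if the rule matched.
    """
    if (feature.get("fme_feature_type") == source_type
            and feature.get(attr_name) == attr_value):
        # Both conditions hold, so record the destination feature type.
        feature["new_fme_feature_type"] = dest_type
        return True
    return False


record = {"fme_feature_type": "MapInterpolation", "Program": "RMP"}
apply_rule(record, "MapInterpolation", "Program", "RMP", "RiskManagement")
print(record["new_fme_feature_type"])  # RiskManagement
```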

So let’s give this a try.

Scenario

This example is slightly more complicated, because I want to work with multiple feature types to demonstrate the full potential of conditional mapping.

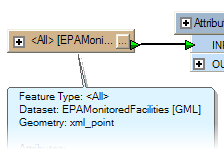

In this scenario I have a dataset of facilities registered with various environmental safety programs. To work with the data I need it to be structured as a separate Feature Type for each program.

Unfortunately the provider of the data has seen fit to provide it divided by data capture method.

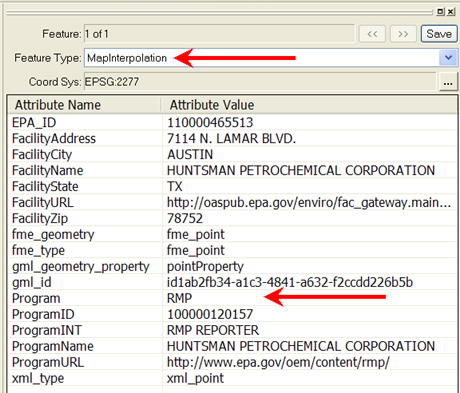

For example, the record shown here is stored on a layer called MapInterpolation, because that reflects how the data was captured. But because it is part of the RMP (Risk Management Plan) program, I want to move it to a layer called RiskManagement.

In fact, the different source Feature Types I have are:

- HouseAddress

- OtherAddress

- MapInterpolation

- PhotoInterpolation

…whereas the different destination Feature Types I want to get are:

- RiskManagement

- AssessmentCleanupRedevelopmentExchange

- ToxicReleaseInventory

- ResourceConversationRecoveryAct

There’s no simple mapping between the source and destination – it’s all dependent on the value of the attribute called Program. So I am going to use the SchemaMapper transformer to handle the switch between what I have and what I want to get.

Feature Type Mapping: Lookup Table

My SchemaMapper lookup table has to define a clause for both the Feature Type and the attribute value, so it looks like this:

SourceType,SourceProgramAttribute,SourceProgram,DestinationType
HouseAddress,Program,RMP,RiskManagement
HouseAddress,Program,ACRES,AssessmentCleanupRedevelopmentExchange
HouseAddress,Program,TRIS,ToxicReleaseInventory
HouseAddress,Program,RCRA,ResourceConversationRecoveryAct

In other words, the first line after the header defines that:

WHERE Source Feature Type = HouseAddress

AND Program = RMP

THEN Destination Feature Type = RiskManagement

But then I also have to define the same rules for each of the other source Feature Types, for example:

MapInterpolation,Program,RMP,RiskManagement
MapInterpolation,Program,ACRES,AssessmentCleanupRedevelopmentExchange
MapInterpolation,Program,TRIS,ToxicReleaseInventory
MapInterpolation,Program,RCRA,ResourceConversationRecoveryAct

Note that these fields don’t need to be in any particular order. The order here is simply the one I find easiest to read when studying the data visually.
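As a rough illustration of how such a table can be read and applied, here is a Python sketch that loads the lookup rows and finds the destination for a feature. It is only a model under stated assumptions: features are dictionaries, the CSV text is a small extract of the table above, and `load_lookup` and `map_feature` are hypothetical helpers, not FME functions. Because `csv.DictReader` reads columns by header name, the sketch also gives the same result whichever order the columns are in.

```python
import csv
import io

# A small extract of the lookup table from this post.
LOOKUP_CSV = """SourceType,SourceProgramAttribute,SourceProgram,DestinationType
HouseAddress,Program,RMP,RiskManagement
HouseAddress,Program,ACRES,AssessmentCleanupRedevelopmentExchange
MapInterpolation,Program,RMP,RiskManagement
MapInterpolation,Program,TRIS,ToxicReleaseInventory
"""


def load_lookup(text):
    """Index rows by (source type, attribute name, attribute value).

    Columns are read by header name, so their physical order does not matter.
    """
    table = {}
    for row in csv.DictReader(io.StringIO(text)):
        key = (row["SourceType"], row["SourceProgramAttribute"], row["SourceProgram"])
        table[key] = row["DestinationType"]
    return table


def map_feature(feature, table):
    """Return the destination type for a feature, or None if no row matches."""
    for (src_type, attr, value), dest in table.items():
        if feature.get("fme_feature_type") == src_type and feature.get(attr) == value:
            return dest
    return None


lookup = load_lookup(LOOKUP_CSV)
print(map_feature({"fme_feature_type": "MapInterpolation", "Program": "TRIS"}, lookup))
# ToxicReleaseInventory
```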

So, now that I have a lookup table defined, let’s look at the SchemaMapper parameters.

Feature Type Mapping: SchemaMapper Definition

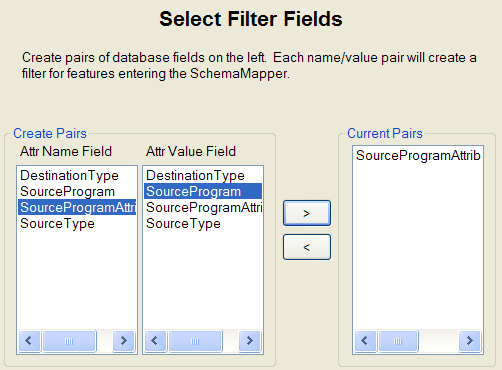

In the Filter Fields dialog (introduced in post #3), I want to set up the attribute part of the Where clause. I do this by selecting the lookup field that defines the source attribute and the lookup field that defines the attribute value, then clicking the > button to make them into a pair:

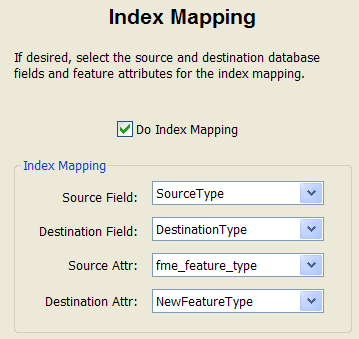

Now I need to define the Feature Type mapping to be carried out wherever the above conditions are true. I’m using the same four parameters I used in post #2.

Remember, the logic of this is basically:

if Source Attr = Source Field, then set Destination Attr to Destination Field

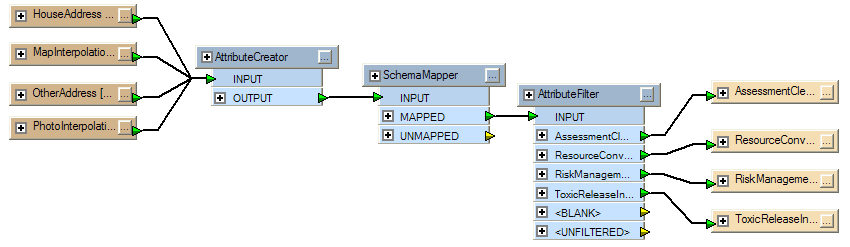

So that’s the SchemaMapper defined. When completed, the workspace looks something like this:

Remember (again from post #2) that, up to the SchemaMapper, all we have done is map the source data to a new attribute that defines the destination Feature Type; we still have to create that Feature Type and connect the data to it. As you can see, I did that with an AttributeFilter transformer.
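The routing step can be pictured with a short Python sketch. It only models the idea of an AttributeFilter splitting features by the value of `new_fme_feature_type`; it is not FME code, and the `route` function and the "unfiltered" bucket name are hypothetical. The point it shows is that, in this static setup, every possible output has to be listed in advance.

```python
from collections import defaultdict

# The four destination feature types wired into the workspace.
OUTPUTS = {
    "RiskManagement",
    "AssessmentCleanupRedevelopmentExchange",
    "ToxicReleaseInventory",
    "ResourceConversationRecoveryAct",
}


def route(features, outputs=OUTPUTS):
    """Group features by their new_fme_feature_type value."""
    routed = defaultdict(list)
    for feature in features:
        target = feature.get("new_fme_feature_type")
        # Values with no pre-wired output fall into a catch-all bucket.
        routed[target if target in outputs else "unfiltered"].append(feature)
    return dict(routed)


result = route([
    {"new_fme_feature_type": "RiskManagement"},
    {"new_fme_feature_type": "NationalPollutantDischargeEliminationSystem"},
])
print(sorted(result))  # ['RiskManagement', 'unfiltered']
```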

If I click the Run button in Workbench, this setup now maps my data correctly.

Feature Type Mapping: Semi-Dynamic Functionality

Wait! The SchemaMapper dragon has an idea!

He says that I should try to handle, automatically, any data that belongs to a previously unknown program or a previously unknown capture type.

For example, if my source data changes and I find:

- A new Feature Type called GPSHighPrecision, and/or…

- A new program called NPDES (National Pollutant Discharge Elimination System)

…could I handle these by editing the lookup table but NOT the workspace?

You bet I could, dragon, and it won’t take much effort to set up either.

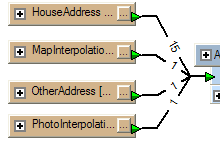

On the source Feature Type side, I’m currently set up with a single object per Feature Type:

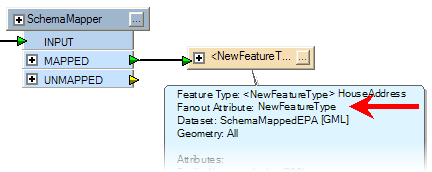

So first of all I’ll change this to be a single Feature Type object controlled by a Merge Filter:

On the destination side I originally used an AttributeFilter and a set of destination Feature Types. Now I’ll replace all of that with a fanout.

Now, if I get a new source Feature Type (such as GPSHighPrecision) or a new Program Type (such as NPDES), I don’t need to edit the workspace to accommodate them. All I need to do is add them to my lookup table. For example, I would add a line like the following for each existing Feature Type:

HouseAddress,Program,NPDES,NationalPollutantDischargeEliminationSystem

…plus add new lines for the new GPS Feature Type:

GPSHighPrecision,Program,RMP,RiskManagement
GPSHighPrecision,Program,ACRES,AssessmentCleanupRedevelopmentExchange
GPSHighPrecision,Program,TRIS,ToxicReleaseInventory
GPSHighPrecision,Program,RCRA,ResourceConversationRecoveryAct
GPSHighPrecision,Program,NPDES,NationalPollutantDischargeEliminationSystem
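Here is a rough Python model of why the fanout makes this work. It is a sketch under stated assumptions, not FME's fanout implementation: features are dictionaries, `load_lookup` and `fanout` are hypothetical helpers, and outputs are kept in memory rather than written to a dataset. Because no output list is hard-coded, adding the NPDES line to the table text is enough to produce a new destination; the code itself does not change.

```python
import csv
import io
from collections import defaultdict

HEADER = "SourceType,SourceProgramAttribute,SourceProgram,DestinationType\n"


def load_lookup(text):
    """Index lookup rows by (source type, attribute name, attribute value)."""
    return {
        (r["SourceType"], r["SourceProgramAttribute"], r["SourceProgram"]): r["DestinationType"]
        for r in csv.DictReader(io.StringIO(text))
    }


def fanout(features, table):
    """Send each mapped feature to an output named after its destination type.

    An output appears as soon as some feature maps to it. Features with no
    matching lookup row are simply skipped in this sketch.
    """
    outputs = defaultdict(list)
    for feature in features:
        for (src, attr, value), dest in table.items():
            if feature.get("fme_feature_type") == src and feature.get(attr) == value:
                outputs[dest].append(feature)
                break
    return dict(outputs)


features = [{"fme_feature_type": "GPSHighPrecision", "Program": "NPDES"}]
before = HEADER + "HouseAddress,Program,RMP,RiskManagement\n"
after = before + "GPSHighPrecision,Program,NPDES,NationalPollutantDischargeEliminationSystem\n"
print(fanout(features, load_lookup(before)))       # {}
print(list(fanout(features, load_lookup(after))))  # ['NationalPollutantDischargeEliminationSystem']
```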

Of course, you might ask: “How would you handle these new items if you didn’t know they existed?” The answer is that I couldn’t go that far, but what I could do is add a Logger transformer (or similar) to the UNMAPPED output port on the SchemaMapper. This would alert me to these new items, which I could then add to my lookup table.
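The idea of catching unmapped features can be sketched in Python as follows. This is an illustration only: Python's standard `logging` module stands in for a Logger transformer, the lookup table is assumed to be a dictionary keyed by (source type, attribute name, attribute value), and `split_unmapped` is a hypothetical helper. Each new combination is logged once, which is the kind of alert you would use to decide what to add to the lookup table.

```python
import logging

logging.basicConfig(level=logging.WARNING, format="%(levelname)s: %(message)s")
log = logging.getLogger("schemamapper_sketch")


def split_unmapped(features, table, attr="Program"):
    """Return (mapped, unmapped); log each new (type, value) combination once."""
    mapped, unmapped, seen = [], [], set()
    for feature in features:
        key = (feature.get("fme_feature_type"), attr, feature.get(attr))
        if key in table:
            feature["new_fme_feature_type"] = table[key]
            mapped.append(feature)
        else:
            unmapped.append(feature)
            if key not in seen:
                seen.add(key)
                log.warning("No lookup row for %s with %s=%s", key[0], attr, key[2])
    return mapped, unmapped


table = {("HouseAddress", "Program", "RMP"): "RiskManagement"}
features = [
    {"fme_feature_type": "HouseAddress", "Program": "RMP"},
    {"fme_feature_type": "GPSHighPrecision", "Program": "NPDES"},
    {"fme_feature_type": "GPSHighPrecision", "Program": "NPDES"},
]
# Emits a single warning for the GPSHighPrecision / NPDES combination.
mapped, unmapped = split_unmapped(features, table)
```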

And now I have a semi-dynamic workspace. I call it semi-dynamic because I can handle new data with the same attribute schema, but if a new Feature Type (source or destination) introduced by the update required a different set of attributes, then I would need to make changes to the workspace.

Handling that situation completely would need a fully dynamic workspace, which I’ll look at another time.
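One way to picture the "semi" in semi-dynamic is a check for attributes that the fixed destination schema does not know about. The Python sketch below is purely illustrative: the attribute names in `EXPECTED_ATTRS` (and `Accuracy` in the example) are hypothetical, and treating names that start with `fme_` as format attributes to ignore is an assumption of the sketch.

```python
# Hypothetical attribute names the static destination feature types were built with.
EXPECTED_ATTRS = {"Program", "FacilityName", "Address"}


def unexpected_attributes(feature, expected=EXPECTED_ATTRS):
    """Return user attribute names that the fixed schema does not include.

    Names starting with "fme_" are treated as format attributes and ignored.
    """
    return {name for name in feature if not name.startswith("fme_")} - expected


new_feature = {"fme_feature_type": "GPSHighPrecision", "Program": "NPDES", "Accuracy": 0.02}
print(unexpected_attributes(new_feature))  # {'Accuracy'}
```

A non-empty result is the signal that lookup-table edits alone are not enough and the workspace itself would need changing.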

Source Data

Note that the data used here was retrieved from Data.gov – and then hacked about a bit to create a good example. You can find the original dataset at http://www.data.gov/details/6

Benefits

To summarize, the main benefit now is that I can handle new data by editing the lookup table – and not the workspace.

But even without this, I have the benefit that my schema mapping is handled by at most two transformers. The non-SchemaMapper method would involve a whole bunch of Tester and/or ValueMapper transformers to handle all of the individual conditions and lookups.

Again, the above is just a simple example (4 feature types, 4 attribute values) and wouldn’t take much work to create statically (4 * 4 = 16 different combinations). But the full dataset from which this extract was derived has 34 different capture methods (Feature Types) and 42 different programs (attribute values). That’s 34 * 42 = 1,428 different combinations, and I defy anyone to define that many connections within a workspace!
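In this example the destination depends only on the Program value, so the lookup rows themselves can be generated from two short lists rather than typed by hand. The Python sketch below assumes exactly that (one destination per program, regardless of source type); `lookup_rows` is a hypothetical helper, and the real table could of course contain exceptions that would need manual edits.

```python
from itertools import product

SOURCE_TYPES = ["HouseAddress", "OtherAddress", "MapInterpolation", "PhotoInterpolation"]

# Assumption: the destination depends only on the Program value.
PROGRAM_TO_DEST = {
    "RMP": "RiskManagement",
    "ACRES": "AssessmentCleanupRedevelopmentExchange",
    "TRIS": "ToxicReleaseInventory",
    "RCRA": "ResourceConversationRecoveryAct",
}


def lookup_rows(source_types, program_to_dest, attr="Program"):
    """Yield the CSV header, then one line per (source type, program) combination."""
    yield "SourceType,SourceProgramAttribute,SourceProgram,DestinationType"
    for src, (program, dest) in product(source_types, program_to_dest.items()):
        yield f"{src},{attr},{program},{dest}"


rows = list(lookup_rows(SOURCE_TYPES, PROGRAM_TO_DEST))
print(len(rows) - 1)  # 16 data rows (4 * 4)
print(34 * 42)        # 1428 combinations for the full dataset
```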

OK. With these four posts we’ve covered all of the basics of the SchemaMapper transformer. However, I do hope to cover a few more topics in the near future, namely:

- Multiple clauses in the Filter Fields dialog

- Feature Type and Attribute Mappings inside the same lookup table

- Other capabilities of the SchemaMapper (e.g. the “Default Value” field in attribute mapping)

- How and why to incorporate the SchemaMapper inside a dynamic workspace.

Have fun!