Quality Maps in a Quantized World

Computers have been a big boon to the field of mapping, magnifying our ability to record, share, and analyse spatial data to a tremendous degree. One of the quirks that comes along for the ride is that everything in software is quantized: if you zoom into your map far enough, you eventually reach a spacing below which two distinct points can no longer be stored as separate locations. You can think of this as overlaying your map on top of ultra-fine graph paper and forcing every point or vertex to lie on that grid. When planning how to store your spatial data, you’ll want to consider this issue up front: first determine the precision and geographic area you need to represent, and then select and configure software to meet these needs.

Normally, you can ignore this grid snapping effect. For example, if the spacing between those grid lines is smaller than the accuracy of your data – it’s often vastly smaller – you won’t care about the negligible distortions that will result.

However, some formats store coordinates with low precision (typically to improve performance or reduce storage costs), and for these, correct configuration becomes essential to preserving data quality. The key idea is to trade off the area you can represent (e.g. a specific 10 km by 10 km square, or a large country) against precision (good to a meter or good to a micron). Choose too small an area and parts of your map can’t be represented; choose too coarse a precision and detail is lost.
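To make the trade-off concrete, here is a rough sketch of the arithmetic. It assumes a hypothetical format that stores each coordinate as a 32-bit integer count of grid steps; real formats differ in bit width and layout, so treat the numbers as illustrative only.

```python
# Assumption: a hypothetical format storing each coordinate as a 32-bit
# integer number of grid steps. Real formats may use other widths.
INT32_STEPS = 2**32  # distinct values available along one axis


def max_extent_m(grid_spacing_m: float) -> float:
    """Largest span (in meters) one axis can cover at this grid spacing."""
    return INT32_STEPS * grid_spacing_m


def finest_spacing_m(extent_m: float) -> float:
    """Finest grid spacing (in meters) that still covers the given span."""
    return extent_m / INT32_STEPS


for label, spacing in [("1 m", 1.0), ("1 mm", 1e-3), ("1 micron", 1e-6)]:
    km = max_extent_m(spacing) / 1000
    print(f"{label:>8} grid -> about {km:,.3f} km per axis")
```

Under this assumption, a one-micron grid spans only about 4.3 km per axis, so it could not cover a 10 km by 10 km square, while a one-millimeter grid comfortably covers a large country.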

Typically, this configuration will be expressed as an offset and scale, but it can also appear as a matrix or similarity transformation. An excellent GTViewer blog post provides an in-depth explanation of how this works for their format; the issues are similar for a handful of other GIS formats and spatial databases, including MapInfo TAB, Informix, DB2, and some older variants of ESRI’s Geodatabase.
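As a minimal sketch of the offset-and-scale idea, the snippet below converts a real-world coordinate to a stored integer and back. The `GridConfig` class and its field names are hypothetical, chosen for illustration, and do not reflect how any of the formats named above implement it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GridConfig:
    """Hypothetical offset-and-scale configuration for one axis."""

    offset: float  # real-world coordinate that maps to stored value 0
    scale: float   # grid spacing: real-world units per stored step

    def encode(self, value: float) -> int:
        # Snap to the nearest grid step (Python's round() sends exact ties to even).
        return round((value - self.offset) / self.scale)

    def decode(self, stored: int) -> float:
        return self.offset + stored * self.scale


# Centimeter grid anchored at 500,000 (units are whatever the map uses).
cfg = GridConfig(offset=500_000.0, scale=0.01)
stored = cfg.encode(512_345.6789)
print(stored, cfg.decode(stored))
```

The round trip returns a value near 512,345.68 rather than the original 512,345.6789: anything finer than the scale is discarded when the coordinate is stored.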

Let’s consider the consequences of poor configuration in more detail:

Choosing the wrong area. Once the valid area is fixed, you can’t extend your map beyond it. This means that auto-generating parameters from input is risky, as the initial data might not cover the whole area of interest.
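The risk of auto-generating the area can be sketched in a few lines. The helper names and sample coordinates below are invented for illustration: the extent is derived from a first batch of data, and a later point then falls outside it.

```python
def extent_from_points(points):
    """Bounding box (min_x, min_y, max_x, max_y) of the given points."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return min(xs), min(ys), max(xs), max(ys)


def fits(extent, point):
    """True if the point lies inside the extent (edges included)."""
    min_x, min_y, max_x, max_y = extent
    x, y = point
    return min_x <= x <= max_x and min_y <= y <= max_y


# Hypothetical first batch of data used to auto-generate the valid area.
initial_survey = [(1_000.0, 2_000.0), (4_000.0, 2_500.0), (3_000.0, 6_000.0)]
extent = extent_from_points(initial_survey)

# A later feature in the same project, east of everything seen so far.
later_point = (9_500.0, 3_000.0)
print(extent, fits(extent, later_point))
```

Here `fits` reports `False` for the later point, which is the situation to avoid by choosing the area from the full region of interest rather than from whatever data happens to arrive first.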

Choosing the wrong precision. This is more interesting, as the resulting loss of detail manifests in different ways:

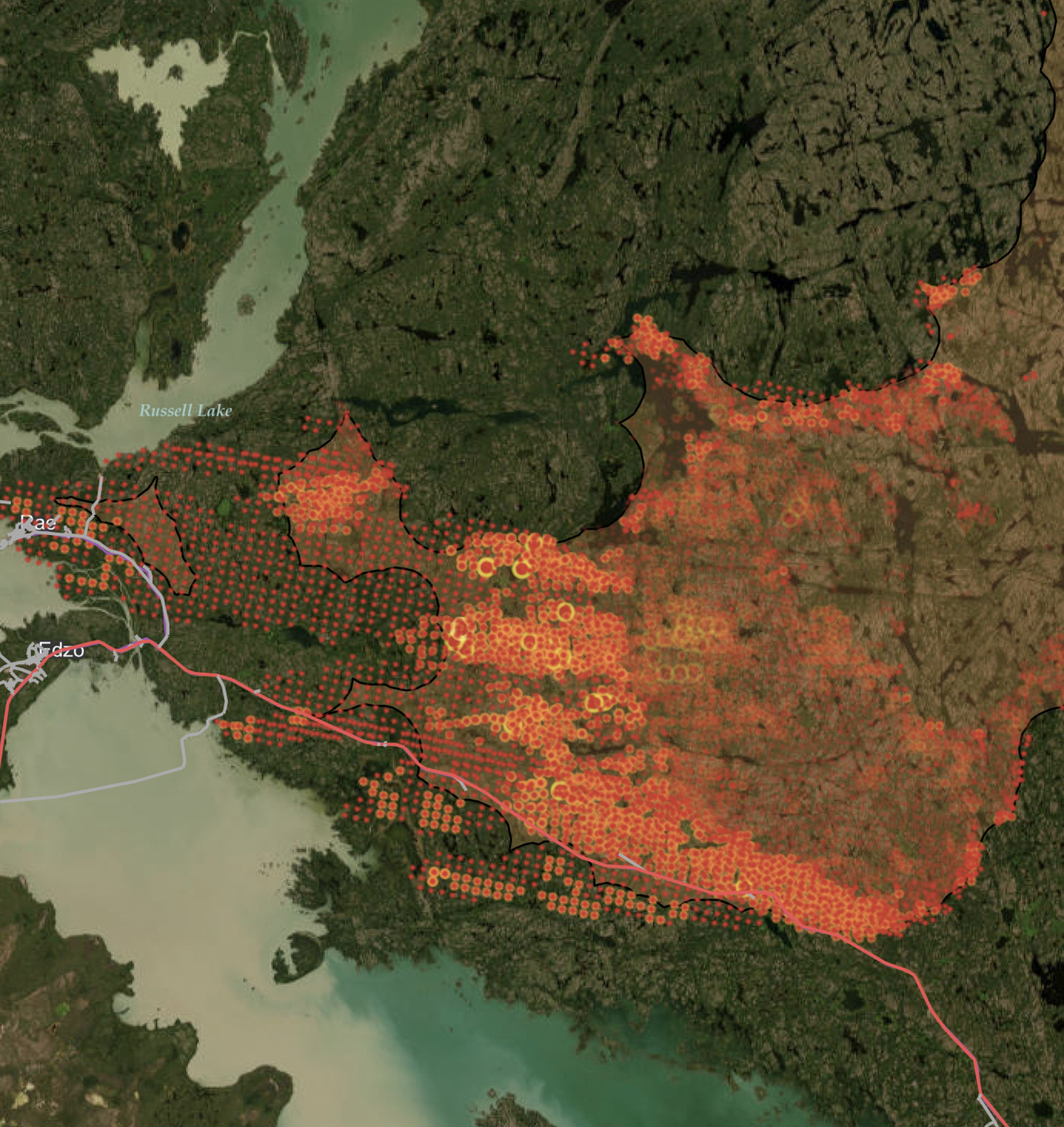

- Features can move. For example, if data can only be stored to ten-meter precision, points or vertices might move as much as five meters in each dimension. This can be unexpected, as the typical hints of imprecision aren’t always present – if you see “75,000” you might think “perhaps rounded to the nearest thousand”, but if you see “75,123.456” you’d be less likely to draw that conclusion.
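A small sketch of that movement, assuming simple round-to-nearest snapping onto a ten-meter grid (actual software may truncate or round differently) and a made-up sample point:

```python
import math


def snap(value: float, spacing: float) -> float:
    """Move a coordinate to the nearest multiple of the grid spacing."""
    return round(value / spacing) * spacing


spacing = 10.0  # ten-meter grid
original = (75_123.456, 12_344.9)  # hypothetical point, in meters
snapped = tuple(snap(v, spacing) for v in original)

dx, dy = (s - o for s, o in zip(snapped, original))
print(snapped, round(math.hypot(dx, dy), 3))
```

Each axis moves by at most half the spacing (five meters here), so the straight-line displacement can approach about 7.07 meters when both axes are near their worst case.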

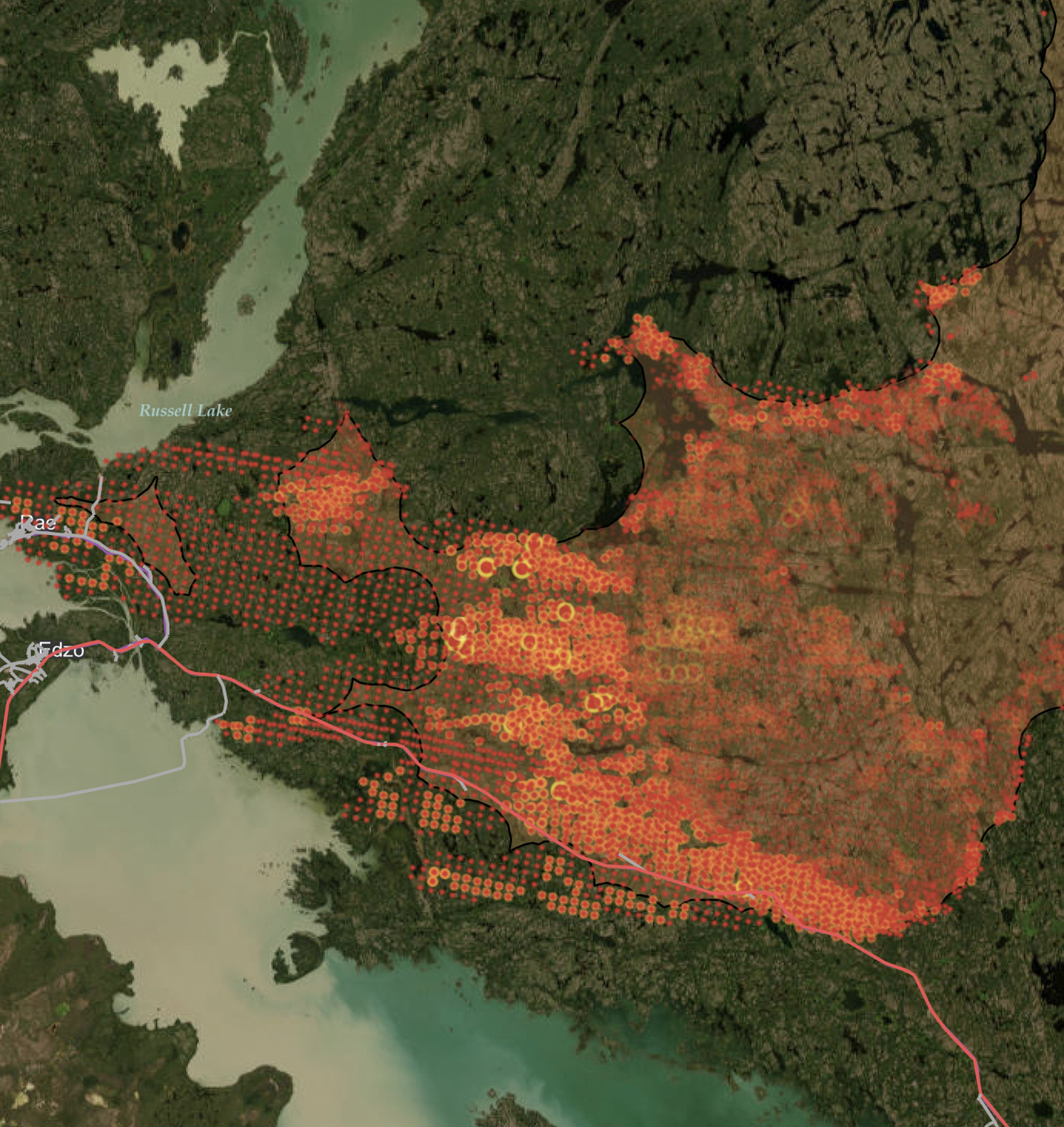

- Geometric properties can be lost. For example, a polygon that is not self-intersecting might become self-intersecting after being “snapped” to the underlying grid, and may then be considered invalid or unusable. In extreme cases, you get “dimensional collapse”, e.g. where an area can only be represented as a line or a point due to vertices snapping together.
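Dimensional collapse is easy to reproduce. The sketch below uses a made-up thin triangle and round-to-nearest snapping onto a ten-meter grid, then compares the area before and after with the shoelace formula.

```python
def snap_point(point, spacing):
    """Snap both coordinates of a point to the nearest grid line."""
    x, y = point
    return (round(x / spacing) * spacing, round(y / spacing) * spacing)


def shoelace_area(ring):
    """Unsigned area of a simple polygon given as a list of (x, y) vertices."""
    total = 0.0
    for (x1, y1), (x2, y2) in zip(ring, ring[1:] + ring[:1]):
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0


# Hypothetical thin triangle, coordinates in meters.
triangle = [(101.0, 102.0), (118.0, 101.0), (109.0, 104.0)]
snapped = [snap_point(p, 10.0) for p in triangle]

print(shoelace_area(triangle))  # area before snapping
print(snapped)                  # all three vertices now share y = 100
print(shoelace_area(snapped))   # area after snapping
```

After snapping, the three vertices lie on a single horizontal line, so the polygon’s area drops from 21 square meters to zero: the area has collapsed into a line.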

However your GIS or spatial database stores data, it’s worthwhile considering these issues early. If you first determine your needs (precision, area of interest, processing capabilities, etc.) and then ensure your system can deliver, you’ll be one step closer to quality in our all-digital world.

Are these issues you’ve thought about when working with spatial data? Have you been affected by this quantization effect? I’d love to hear your stories.