The promise of Docker is that it can change how we deploy FME processing capabilities both on-premises and in the cloud.

For us, Docker is the ultimate abstraction layer in which to deploy fault-tolerant, scalable solutions. First Docker abstracted compute, then it abstracted networking, and soon it will abstract storage. As someone who did graduate work in distributed and parallel processing, I find this very exciting! Read more about Docker’s future plans around storage.

We want to share some of our early Docker experiments that have helped us learn and understand Docker Swarm and services. The nice thing is that you can try this on a single machine knowing it works the same across any number of machines that form a swarm.

If you want to perform the demo yourself you can find all resources at the end of this post.

This is part 3 in a series on FME and Docker.

Simple Docker Swarm Demo with FME

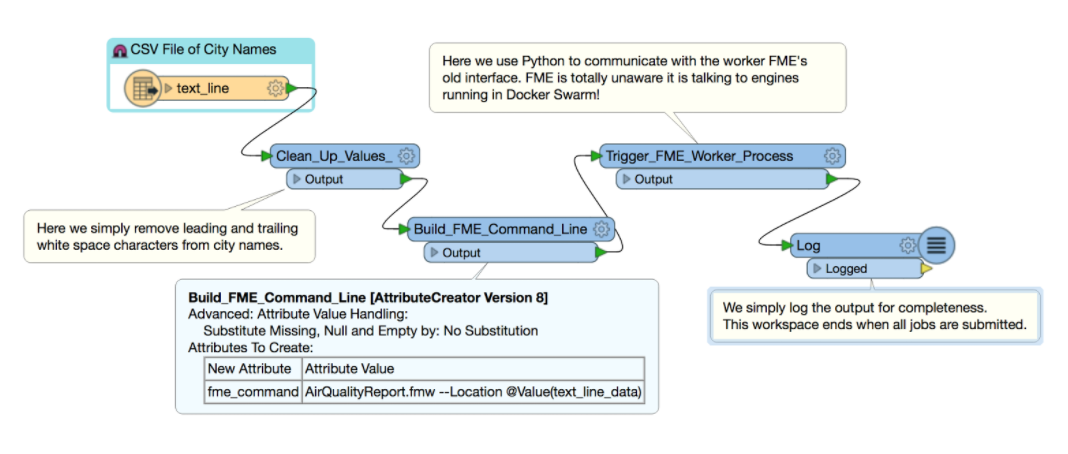

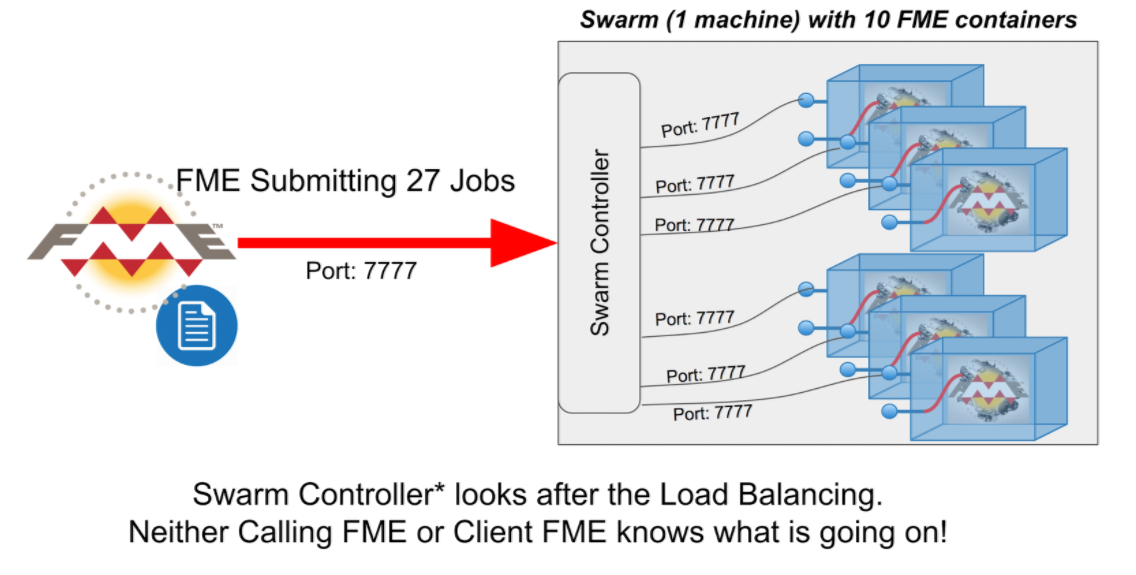

The demo is very simple and consists of two workflows (FME Workspaces): a driver workflow and a worker workflow (10 engines). This is shown below.

Watch the video demonstration of Docker Swarm and FME.

Driver Workflow

This FME Workspace simply reads a CSV file of city names. For each city name, it submits a task to a Docker swarm of “workers”. The workers generate a web page showing the air quality for the city. The driver doesn’t wait for a task to finish; it simply submits all requests to the workers. The jobs are all submitted over TCP/IP via a single port. To the driver, it is merely submitting requests to the “Docker Swarm” compute fabric. The driver neither knows nor cares about the computation capacity of the swarm. In fact, the driver doesn’t even know it is talking to a swarm. For this demo we are running the driver outside of Docker, and as far as it is concerned it is just submitting jobs to a single standalone FME Engine! How cool is that!!
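To make the fire-and-forget pattern concrete, here is a minimal shell sketch of a driver loop. It is not the FME Workspace itself: the function names, the `ENGINE_HOST` and `ENGINE_PORT` variables, the one-connection-per-job behavior, and the plain-text payload are all assumptions for illustration, since the actual message format the FME Engine expects is not described here.

```shell
#!/bin/bash
# Hypothetical driver loop: one fire-and-forget submission per city.
# Host, port variables, and payload format are assumptions, not the FME protocol.
ENGINE_HOST="${ENGINE_HOST:-localhost}"
ENGINE_PORT="${ENGINE_PORT:-7777}"

# Send one line over a fresh TCP connection using bash's /dev/tcp redirection.
send_job() {
  printf '%s\n' "$1" > "/dev/tcp/${ENGINE_HOST}/${ENGINE_PORT}"
}

# Read city names (first CSV column) and hand each one to the sender
# without waiting for any result to come back.
submit_cities() {
  local csv="$1" sender="${2:-send_job}"
  local city _rest
  # Strip carriage returns so Windows-style CSV files also work.
  tr -d '\r' < "$csv" | while IFS=, read -r city _rest || [ -n "$city" ]; do
    [ -n "$city" ] || continue   # skip blank lines
    "$sender" "$city"
  done
}
```

Calling `submit_cities cities.csv` (the file name is hypothetical) would send each city to port 7777 on localhost. Passing a second argument such as `echo` swaps the sender, so you can preview the jobs without a running swarm.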

Where is the Docker Magic?

The magic here is that as far as the driver is concerned it is just submitting jobs to a single worker FME process. It connects to a single TCP/IP port and sends all of its requests through it.

The driver is completely oblivious to how many workers make up the swarm or that it is even talking to a swarm at all. Docker Swarm magically looks after the networking details of sharing the connection with the workers, regardless of whether the workers are on a single machine or spread across multiple machines. Docker Swarm just hides and deals with all the ugly details.

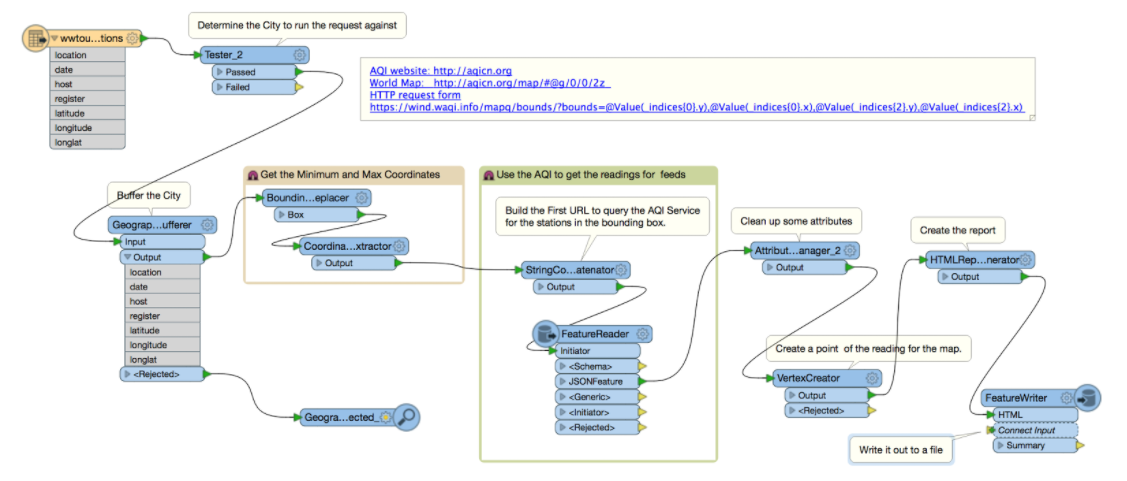

Worker Workflow

The worker workflow is an FME Workspace similar to the one we used during the FME World Tour 2016. Each worker FME Engine is started in a mode that was added long ago (as part of early FME Server experimentation), in which it creates a TCP/IP channel and then waits for a driver application to connect and send it work. In this example we create up to 10 engines.

Worker Script

Here is the script (start_containers_noweb.sh) that creates the service that runs an FME Engine waiting for work on port 7777.

#!/bin/bash

SCRIPTPATH="$( cd "$( dirname "${BASH_SOURCE[0]}" )" && pwd )"

docker swarm init

docker service create --name fme_engine -p 7777:7777 \
  --mount type=bind,target=/fmeengine/workspaces,source=$SCRIPTPATH/workspaces \
  --mount type=bind,target=/fmeengine/results,source=$SCRIPTPATH/results \
  --mount type=bind,target=/fmeengine/logs,source=$SCRIPTPATH/logs \
  safesoftware/fme-engine-2017

docker run -it -d -p 5000:8080 -v /var/run/docker.sock:/var/run/docker.sock \
  manomarks/visualizer

In the script you see 3 Docker commands:

docker swarm init

This command initializes a swarm and makes the current node its first (manager) node.

docker service create

This command creates a service named fme_engine, initially running a single engine container. It specifies that the engine port 7777 is mapped to the same port on the host. The engine is also told where it can find its workspaces to run, the directory to put its log files, and where the results are to be placed.
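One practical detail with `--mount type=bind`: Docker does not create a missing source directory for you, so a task cannot start if one of the three host directories is absent. The helper below is a small sketch (the function name `ensure_mount_dirs` is made up for this example) that creates them next to the script before the service is created.

```shell
#!/bin/bash
# Create the host directories that the three bind mounts expect.
# The directory names match the source= paths used in the service definition.
ensure_mount_dirs() {
  local base="$1" d
  for d in workspaces results logs; do
    mkdir -p "$base/$d"
  done
}
```

In the start script this could be called as `ensure_mount_dirs "$SCRIPTPATH"` just before `docker service create`. If the directories already exist, as they may when you work from a full copy of the demo resources, the call does nothing.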

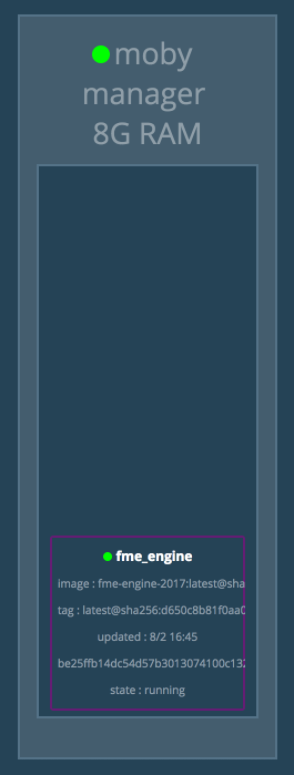

docker run

The final command simply runs our favorite Docker service visualization tool built by Mano Marks. Always nice to visualize all the containers running.

FME Worker Workspace

Where is the Docker Magic?

The magic here is that as far as each worker is concerned, it is the only one. They all specify the same port number within the Docker container and Docker Swarm looks after all the details of multiplexing the requests between the workers and the driver FME.
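As a mental model only, the multiplexing can be pictured as handing each incoming connection to the next engine in turn. The sketch below is a toy simulation, not Docker's implementation: it assumes a simple round-robin policy, and `round_robin` is a name invented for this example. The `fme_engine.N` labels follow the way Swarm names the tasks of a service.

```shell
#!/bin/bash
# Toy model only: assign each incoming job to a replica in turn.
# Usage: round_robin <replica-count> <job>...
round_robin() {
  local replicas="$1" i=0 job
  shift
  for job in "$@"; do
    echo "$job -> fme_engine.$(( i % replicas + 1 ))"
    i=$(( i + 1 ))
  done
}
```

For example, `round_robin 2 Vancouver Paris Tokyo` assigns Vancouver and Tokyo to `fme_engine.1` and Paris to `fme_engine.2`. Neither side needs to know the other exists, which is the point of the demo.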

Launching the 10 Workers

Step 1: Run the start_containers_noweb.sh script.

FMEStandaloneEngine dcm$ ./start_containers_noweb.sh

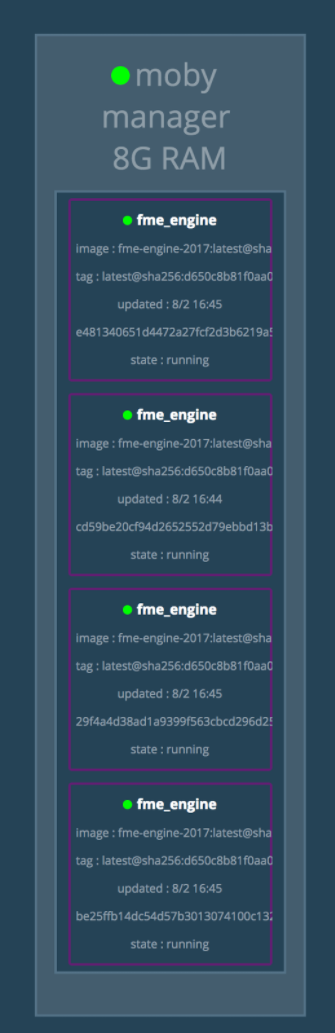

Step 2: Check out the Visualization of the single running FME Engine Container (localhost:5000)

Step 3: Spin up a total of 10 engines

Now tell the swarm we want to have 10 FME Engines running.

FMEStandaloneEngine dcm$ docker service scale fme_engine=10

It is as simple as that. We now have 10 FME Engines running.
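Scaling is asynchronous, so the new engines may take a moment to start. If you would rather check from the command line than from the visualizer, a polling helper along these lines could work. This is a sketch: the function names and the `DOCKER` override variable are inventions for this example, while `docker service ls` with `--filter` and `--format '{{.Replicas}}'` is the standard Docker CLI.

```shell
#!/bin/bash
# DOCKER can be overridden (for example with a stub) for dry runs.
DOCKER="${DOCKER:-docker}"

# Print the "running/desired" replica count for a service, e.g. "10/10".
# Note: the name filter matches by prefix, so keep service names distinct.
replica_status() {
  "$DOCKER" service ls --filter "name=$1" --format '{{.Replicas}}'
}

# Poll once per second until all desired replicas are running,
# or give up after the given number of tries (default 30).
wait_for_replicas() {
  local service="$1" want="$2" tries="${3:-30}" status=""
  while [ "$tries" -gt 0 ]; do
    status="$(replica_status "$service")"
    if [ "$status" = "$want/$want" ]; then
      echo "$service is at $status"
      return 0
    fi
    tries=$(( tries - 1 ))
    sleep 1
  done
  echo "timed out waiting for $service (last status: $status)" >&2
  return 1
}
```

After `docker service scale fme_engine=10`, running `wait_for_replicas fme_engine 10` returns once the service reports 10/10.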

Step 4: Verify they are running by checking the visualization again (localhost:5000)

Running the Driver Workspace

Now that the swarm is launched with all its FME Engines, we are ready to run the driver workspace.

To do this, simply run the driver workspace above in FME Workbench. This is run outside of the Docker Swarm. If you don’t have FME then you can Try FME for free.

Verifying the Results

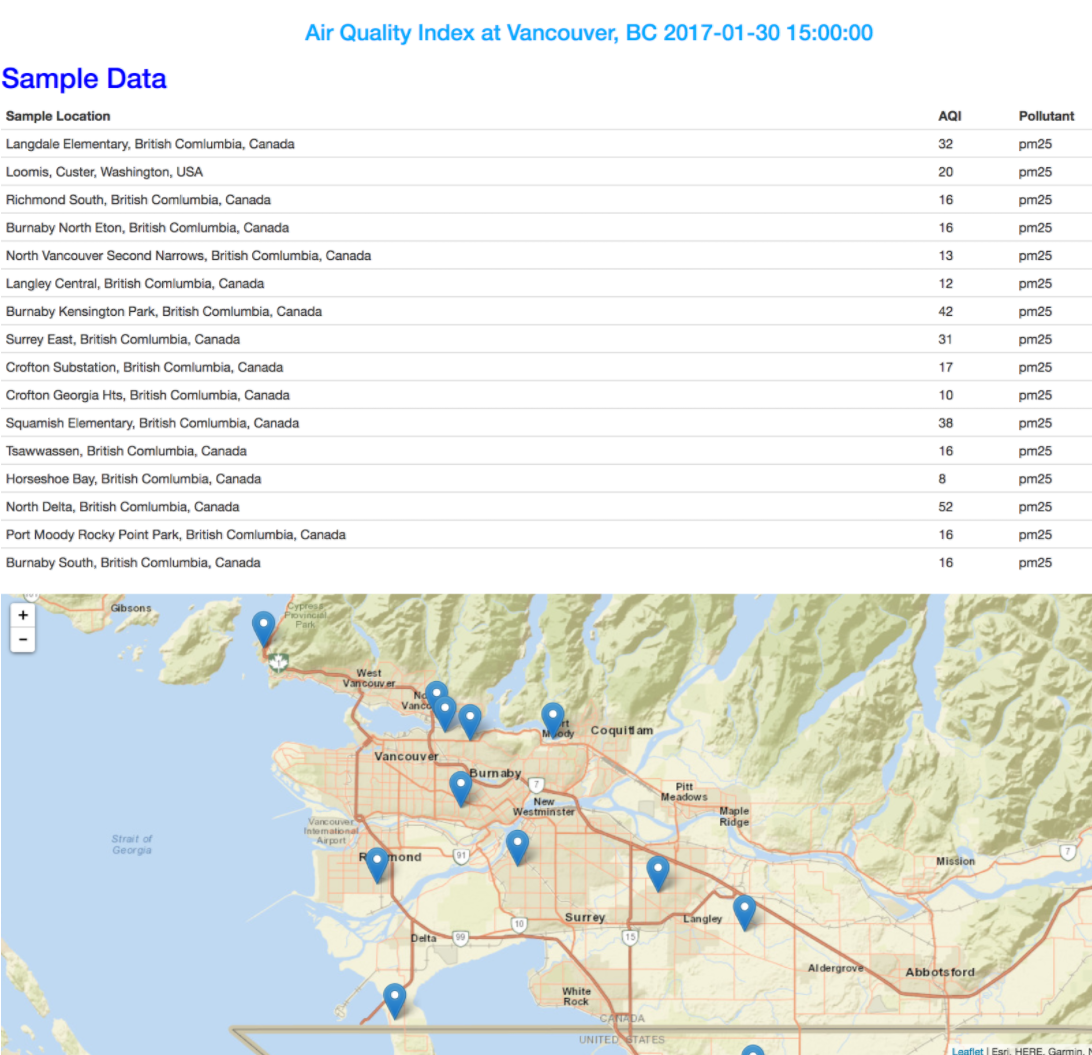

To see the air quality reports generated by the worker workspace, simply go into the “results” directory. You will find one web page for each requested city.
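Because the driver never waits for results, a quick count is a handy way to confirm every job produced a page. The sketch below compares the number of report files with the number of cities. It assumes the reports use an `.html` extension and that the city list has one city per line with no header row; neither detail is confirmed here, and the function names are made up for the example.

```shell
#!/bin/bash
# Count generated report pages directly inside a directory.
# The .html extension is an assumption about the worker's output.
count_reports() {
  find "$1" -maxdepth 1 -type f -name '*.html' | wc -l | tr -d ' '
}

# Count non-blank lines in the city list (assumes no header row).
count_cities() {
  grep -c '[^[:space:]]' "$1"
}

# Print a summary and succeed only if every city has a report.
check_results() {
  local reports cities
  reports="$(count_reports "$1")"
  cities="$(count_cities "$2")"
  echo "$reports of $cities reports generated"
  [ "$reports" -eq "$cities" ]
}
```

For instance, `check_results results cities.csv` (with a hypothetical `cities.csv`) prints the tally and exits non-zero if some reports are still missing, so you can rerun it until the workers catch up.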

Why This Is Just a Toy Demonstration

The demo shows how Docker Swarm abstracts the network so a single TCP/IP port can be shared across different containers and servers that form a swarm. The demo works the same whether all containers are on a single machine or spread across many machines.

This isn’t production quality as it is easy to overrun the TCP/IP channels in a heavily loaded system. There is no queuing going on at all. Swarm is simply sending the worker messages to the different worker containers waiting on the read side of the socket.

Try It Yourself – It’s Fun!

If you want to try it yourself you will find everything you need here. Thanks for putting all this together, Grant!

- Docker Hub Link – https://hub.docker.com/r/safesoftware/fme-engine-2017/

- GitHub Repository – https://github.com/safesoftware/fme-standalone-engine

Watch the video as well.
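When you are done experimenting, you will probably want to clean up. The following sketch undoes what the start script set up: it removes the service, removes the visualizer container, and leaves the swarm. The `teardown_demo` function and the `DOCKER` override variable are names invented for this example; the Docker subcommands themselves are standard.

```shell
#!/bin/bash
# DOCKER can be overridden (for example with a stub) for dry runs.
DOCKER="${DOCKER:-docker}"

teardown_demo() {
  local ids
  # Remove the engine service; its engine containers are stopped with it.
  "$DOCKER" service rm fme_engine
  # The visualizer was started with plain `docker run`, so find it by image.
  ids="$("$DOCKER" ps -q --filter ancestor=manomarks/visualizer)"
  if [ -n "$ids" ]; then
    # Intentionally unquoted so several container IDs become separate arguments.
    "$DOCKER" rm -f $ids
  fi
  # Leave the single-node swarm; --force is needed because this node is a manager.
  "$DOCKER" swarm leave --force
}
```

Run `teardown_demo` on the machine where you ran the start script. Files already written to the results and logs directories are left in place.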

Next Step

In the next blog post we will take what we learned here to build a truly elastic, scalable prototype of FME Server. We will leverage Docker, cloud technology, and FME Server.

Almost too much fun.

Don Murray

Don is the co-founder and President of Safe Software. Safe Software was originally founded doing work for the BC Government on a project sharing spatial data with the forestry industry. During that project Don and fellow co-founder Dale Lutz realized the need for a data integration platform like FME. When Don’s not raving about how much he loves XML, you can find him working with the team at Safe to take the FME product to the next level. You will also find him on the road talking with customers and partners to learn more about what new FME features they’d like to see.

Grant Arnold

Grant is a DevOps Technical Lead for FME Server and works on all types of deployments for FME Server. In his spare time, Grant likes to play games and guitar.