This blog has already looked at augmented reality, the fmear format, and the FME AR app (a brief introduction, and a Coders on Couches episode), so why another article?

Well, I wanted to go into how to make models for FME AR, and since the app is now available for Android as well as iOS, this seemed a great time to do that.

I won’t dive too deeply into the making of 3D models, because that’s not specific to FME AR. But what I will do is show a few different data types translated to fmear format, and give you some suggestions on how you might use the app in different ways.

A Brief Introduction to Augmented Reality and FMEAR

FME AR (FME Augmented Reality) is an app available for your mobile devices. Augmented Reality means adding information to your real-world view (as opposed to Virtual Reality, which creates a totally artificial environment). FME’s AR app is powered by a data format called fmear.

Don’t worry, we haven’t just gone and made a new spatial format. Fmear is mostly OBJ format data wrapped up as a single compressed file, with some added information such as coordinate system and other metadata. So if you write data to OBJ and it looks OK, then it’s fine to write to fmear and view in the FME AR app. Or if you have an fmear dataset, you can treat it as a zip file, and extract the contents to get the data as OBJ.
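Because fmear is described here as a zip-compressed bundle of mostly OBJ data, a few lines of Python are enough to peek inside one. This is a minimal sketch that assumes the .fmear file is a standard zip archive. The names of the files inside aren’t documented here, so it simply collects anything ending in .obj.

```python
import zipfile
from pathlib import Path


def extract_obj_members(fmear_path, out_dir):
    """Unzip an .fmear file into out_dir and return paths of the .obj files inside.

    Assumes the .fmear file is an ordinary zip archive.
    """
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(fmear_path) as archive:
        archive.extractall(out)  # zipfile strips absolute and ".." member paths
        names = archive.namelist()
    # Any non-OBJ members (e.g. metadata) are extracted too, just not returned.
    return sorted(out / name for name in names if name.lower().endswith(".obj"))
```

Calling `extract_obj_members("parks.fmear", "parks_obj")` (hypothetical file names) would give you the OBJ data to open in any 3D viewer.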

The concept is simple, and – with FME – so is creating data for it. In fact, you probably already have data you can convert to fmear in just a single step…

A Basic FMEAR Translation

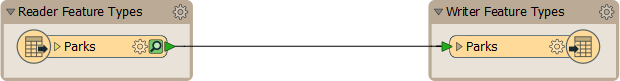

Let’s look at a really simple translation. OBJ allows me to write 2D features, so the simplest translation I can do is 2D polygons (of parks in Vancouver) to fmear:

With that workspace I can project this very basic output onto a surface such as my office floor:

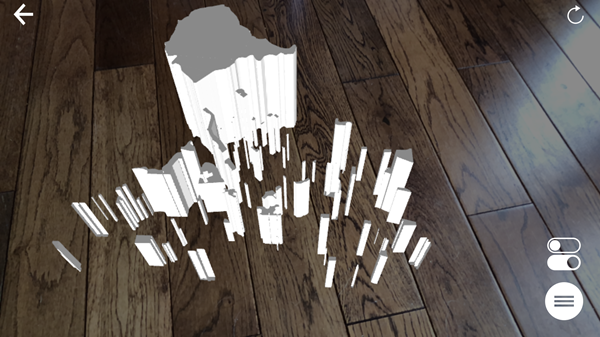

Or I can throw an Extruder transformer into the workspace, extrude each park by its number-of-visitors attribute, and get this:

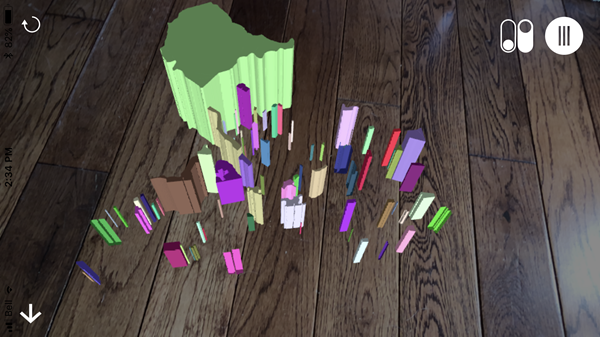
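To show what extrusion means at the OBJ level, here is a minimal Python sketch (not FME’s implementation) that turns a 2D polygon into a closed prism whose height comes from an attribute such as visitor count. It assumes a simple convex ring listed counter-clockwise and treats z as up; some OBJ viewers assume y is up, so an axis swap may be needed.

```python
def extrude_polygon_to_obj(ring, height, base_z=0.0):
    """Extrude a simple 2D polygon into a closed prism and return OBJ text.

    ring: (x, y) vertices, counter-clockwise, first vertex not repeated.
    height: extrusion distance, e.g. the value of a visitor-count attribute.
    """
    n = len(ring)
    lines = []
    # Bottom vertices get OBJ indices 1..n, top vertices n+1..2n (OBJ is 1-based).
    for x, y in ring:
        lines.append(f"v {x} {y} {base_z}")
    for x, y in ring:
        lines.append(f"v {x} {y} {base_z + height}")
    # Bottom cap reversed so its normal points down; top cap keeps CCW order (up).
    lines.append("f " + " ".join(str(i) for i in range(n, 0, -1)))
    lines.append("f " + " ".join(str(n + i) for i in range(1, n + 1)))
    # One quad per edge for the walls, counter-clockwise seen from outside.
    for i in range(1, n + 1):
        j = i % n + 1
        lines.append(f"f {i} {j} {n + j} {n + i}")
    return "\n".join(lines) + "\n"
```

For concave footprints you would triangulate the caps first, since not every OBJ consumer handles non-convex polygon faces.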

If I want color, it’s not as simple as adding a FeatureColorSetter transformer; 3D objects need color applied as an “appearance”. When I do that, it looks like this:

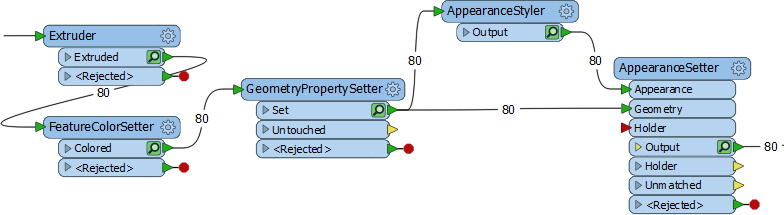

Incidentally, when I want to use colors and textures, I first have to generate a set of appearances and then attach them to the objects. I used a workspace like this:

Again, I don’t want to get too much into this, because it’s general 3D FME functionality, not specific to FME AR. Anyway, I create a random color with the FeatureColorSetter, then use its fme_fill_color attribute as the color in my AppearanceStyler transformer.

The AppearanceStyler creates a “style” feature called an appearance. The AppearanceSetter uses a join to match features (by a trait key) to appearances (by an attribute key). I used the ParkId attribute as the basis for my join. It already exists as an attribute, and the GeometryPropertySetter copies it into a trait as well, so the AppearanceSetter can match each feature back up with its appearance.

If I didn’t have a suitable ID attribute, I’d use a Counter transformer first, or rather I’d use an option in the GeometryPropertySetter that can create a count attribute for me.
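In raw OBJ terms, a plain-color appearance ends up roughly as a material: a `newmtl` entry with a diffuse `Kd` color in the companion .mtl file, referenced by a `usemtl` line in the .obj. The sketch below mimics the random-color-then-join workflow on plain dictionaries. The `park_<id>` material names and dict-based features are hypothetical, and it does not model FME’s distinction between traits and attributes.

```python
import random


def make_appearances(keys, seed=None):
    """One appearance per key: a random diffuse RGB color, each channel in 0..1."""
    rng = random.Random(seed)
    return {key: tuple(round(rng.random(), 3) for _ in range(3)) for key in keys}


def join_and_write(features, appearances):
    """Join features to appearances on ParkId and emit the OBJ/MTL pieces.

    Returns (mtl_text, usemtl), where usemtl maps each matched ParkId to the
    line that would precede that feature's faces in the .obj file.
    """
    mtl_lines = []
    for key, (r, g, b) in appearances.items():
        mtl_lines += [f"newmtl park_{key}", f"Kd {r} {g} {b}"]
    usemtl = {}
    for feature in features:
        key = feature.get("ParkId")
        if key in appearances:  # features with no matching appearance stay unstyled
            usemtl[key] = f"usemtl park_{key}"
    return "\n".join(mtl_lines) + "\n", usemtl
```

Passing a fixed `seed` makes the “random” colors repeatable between runs, which is handy when comparing outputs.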

So, that’s how I created the data, and you can find that workspace on the FME Hub. Now a quick look at how the app works…

Using the FME AR App

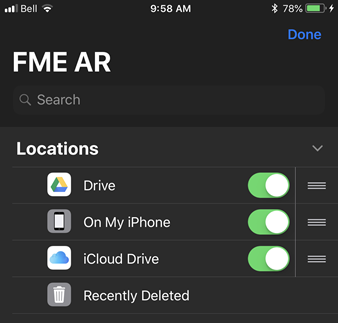

I won’t go into too much detail here either, because it’s a very straightforward app. You open a file and point the device at a flat surface, and the model renders on that surface. But there are some interesting things to mention. Firstly, the app (I’m using an iPhone) lets me directly access fmear files stored in iCloud or on Google Drive:

I don’t have Dropbox or OneDrive installed on my phone; otherwise they would be available too. For this article I used iCloud: I just set FME to write to the iCloud folder on my computer, and the model automatically appears in the FME AR app.

Once I have a model open in the app, I can move, resize, and rotate it using the usual touchscreen gestures, so I don’t really need to show that. Also, of course, the device’s movement is tracked, so if you move around you’ll see different views of your model. That’s the augmented reality part!

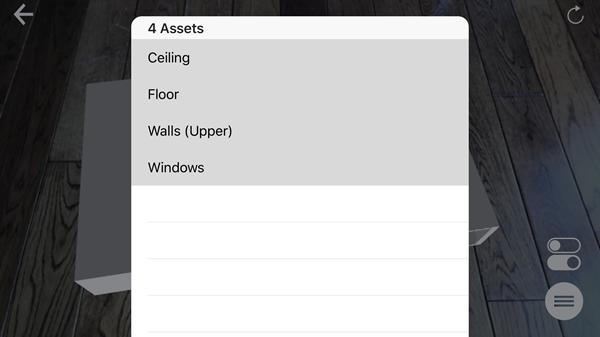

The app also gives you control over layers of data:

So if my fmear file has multiple layers (created using multiple feature types in my FME writer), I can turn them on and off within the FME AR app, potentially revealing data underneath.

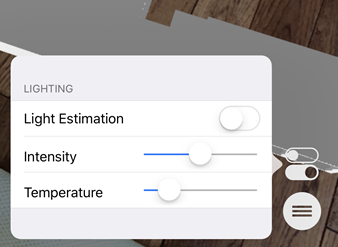

There are also some lighting settings available in the app:

When Light Estimation is turned off, you have manual controls for the Temperature and Intensity of the lighting. Temperature is essentially a cool (blue) to warm (red) scale, and Intensity is the amount of light (dark to bright).

When Light Estimation is turned on, then (if I understand correctly) FME AR assesses the actual light in the room and adjusts the model lighting to match.

FME AR Tips

I can’t think of too many tips, but I do have a few suggestions:

- Whatever you project your data onto (countertop, table, floor), try to ensure it has some texture. If the surface is too flat or plain-colored, the app has trouble detecting where to place the model. It also helps to tilt your device slightly, rather than pointing it directly perpendicular to the projection surface.

- Sometimes too much or too little light affects the app’s ability to identify a surface. Turning off Light Estimation sometimes resolves that.

- The app tracks the movement of your device in order to place the model and calculate your movements. For this reason, you can’t use the app in a moving vehicle; for example, don’t expect to project a model onto a tabletop on a moving train!

So that’s the app. Now let’s look at a few other data types and methods to get them into fmear…

3D Building Models in FMEAR

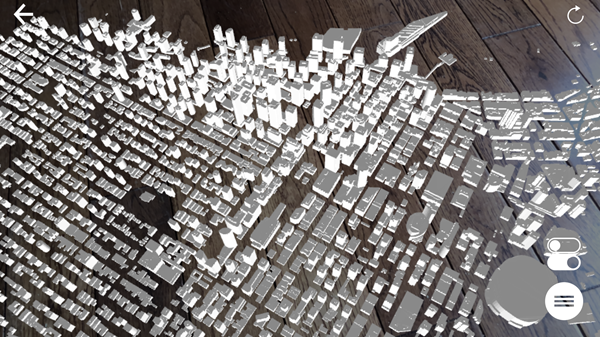

The most likely use for FME AR is to visualize building models in 3D, so I created a workspace to do that. Well, actually I created a couple of examples. If I just want to turn building footprints into blocks, I can use the Extruder transformer, like I did above. Here, for example (workspace on FME Hub), are the building footprints of Vancouver extruded by a height attribute:

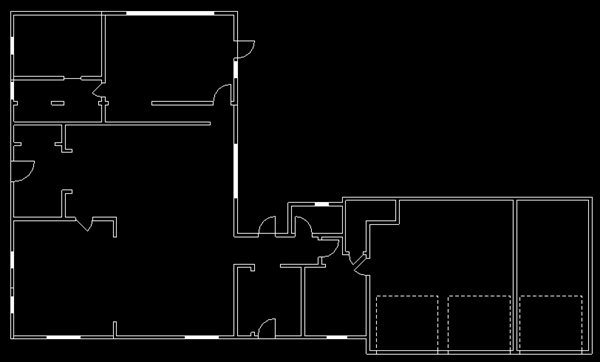

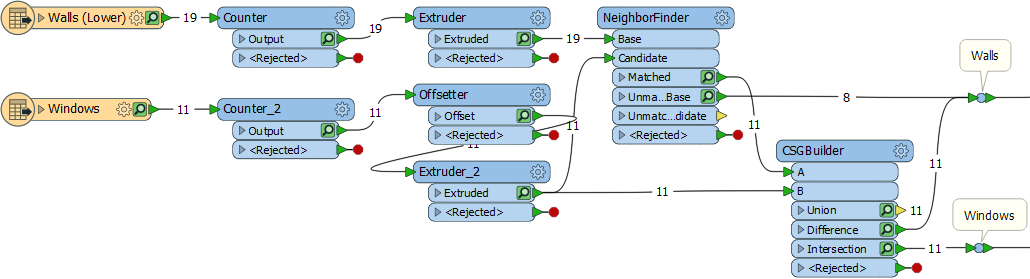

But I can also translate more complex building models. Here my source data is a CAD dataset with the outlines of building walls and windows:

You might already have 3D data, but I wanted to quickly show how to turn 2D data into 3D objects. Here’s my workspace (FME Hub):

It’s not too complex. The Extruder transformers turn my wall outlines into 3D solids, and the Offsetter raises the window features into the middle of the wall. I just used a fixed number for wall/window heights, but you may have actual values you can use (a NeighborFinder can read the closest label to a feature). The rest of the workspace is about punching holes for windows. Each window is given an ID number and matched to its wall using the NeighborFinder. The CSGBuilder then punches the holes, using the WindowID attribute as its Group By.

One difficulty is that the CSGBuilder only works on pairs of features; the walls in my source data needed to be split into separate features so that each received only one window. A second window in the same wall would just be dropped.
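The window-to-wall matching and the Offsetter step can be sketched like this, assuming each wall and window is reduced to a single (x, y) centre point (a hypothetical simplification; the real features are polygons) and using made-up fixed heights. It also flags walls that picked up more than one window, since those are the ones that would need splitting before a pairwise CSG difference.

```python
import math
from collections import defaultdict


def assign_windows(walls, windows, wall_height=3.0, window_height=1.2):
    """Nearest-wall matching plus the z offset that centres a window in its wall.

    walls, windows: dicts of id -> (x, y) centre point.
    Returns (pairs, z_offset, overloaded): pairs maps wall id -> its single window id,
    overloaded lists walls that received more than one window.
    """
    by_wall = defaultdict(list)
    for win_id, (wx, wy) in windows.items():
        nearest = min(walls, key=lambda w: math.hypot(walls[w][0] - wx, walls[w][1] - wy))
        by_wall[nearest].append(win_id)
    pairs = {w: ids[0] for w, ids in by_wall.items() if len(ids) == 1}
    overloaded = sorted(w for w, ids in by_wall.items() if len(ids) > 1)
    # Raising the window by half the leftover height puts it mid-wall.
    z_offset = (wall_height - window_height) / 2
    return pairs, z_offset, overloaded
```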

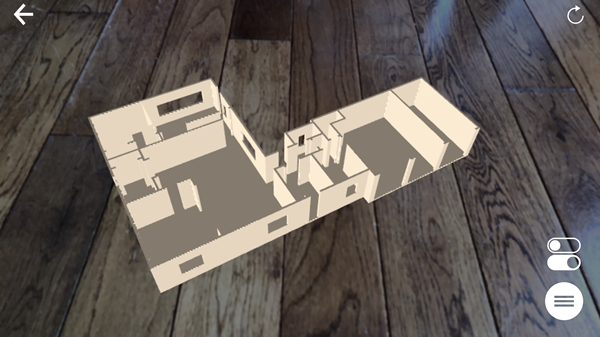

Visualizing 3D Buildings with FME AR

Finally, I write the data to different layers using an fmear writer. I also have data for the floor and ceiling, and write each of them to its own layer. By using multiple layers, I can turn off the ceiling and window objects in my view and see this:

And, of course, I could also add additional floors and the roof as separate layers so I can turn them on and off as required in my view. I might also want to see the building set against a particular backdrop…

Adding an FMEAR Backdrop

There are a few ways you and I might think to add a backdrop to the model, the first of which is to fit the model directly onto a map in real life; i.e., instead of using the wooden floor, can I project the data onto a paper map?

The answer, I think, is yes, to the extent that you can move and resize the model in your display. I think that’s what this user is demonstrating. However, there’s no way to snap the model onto known points on a map (I guess that’s an idea for future functionality).

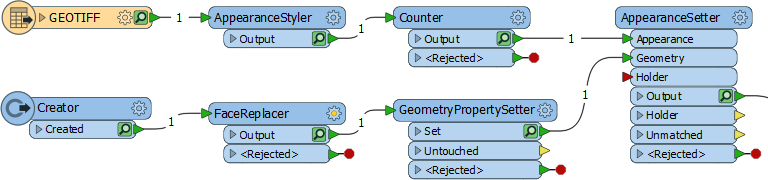

So, for the moment, the most accurate solution is to add any backdrop to the data model itself. You can’t write a raster feature directly to fmear format, but it can be added as an appearance on a flat 2D feature, like so:

In this test workspace (FME Hub), the Creator transformer creates a polygon, and the appearance is attached to it. You need to apply a FaceReplacer transformer to the polygon and then add a raster feature as the appearance. The only concern is which face is uppermost. Even if the AppearanceSetter is set to both sides, I believe OBJ only supports one side. Get the wrong side uppermost and you won’t see anything in FME AR. However, the Orienter transformer can be used to correct that, if it becomes an issue for you. Thanks to my colleague Dmitri for pointing that one out.
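Here is a minimal sketch of the same backdrop idea in raw OBJ/MTL terms, assuming z is up and a texture file named `backdrop.png` referenced from a `backdrop.mtl` file (both names hypothetical). Many renderers draw only the front of a face, where the front is the side from which the vertices appear counter-clockwise, so reversing the vertex order is the raw-OBJ equivalent of what the Orienter fixes.

```python
def backdrop_quad_obj(xmin, ymin, xmax, ymax, z=0.0, texture="backdrop.png", face_up=True):
    """Return (obj_text, mtl_text) for a flat rectangle textured with a raster image."""
    corners = [(xmin, ymin), (xmax, ymin), (xmax, ymax), (xmin, ymax)]  # CCW seen from above
    uvs = [(0, 0), (1, 0), (1, 1), (0, 1)]  # stretch the whole image over the rectangle
    obj = ["mtllib backdrop.mtl"]
    obj += [f"v {x} {y} {z}" for x, y in corners]
    obj += [f"vt {u} {v}" for u, v in uvs]
    obj.append("usemtl backdrop")
    # CCW order faces up (+z); reversed order faces down and may be invisible from above.
    order = [1, 2, 3, 4] if face_up else [4, 3, 2, 1]
    obj.append("f " + " ".join(f"{i}/{i}" for i in order))
    mtl = ["newmtl backdrop", "Kd 1 1 1", f"map_Kd {texture}"]
    return "\n".join(obj) + "\n", "\n".join(mtl) + "\n"
```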

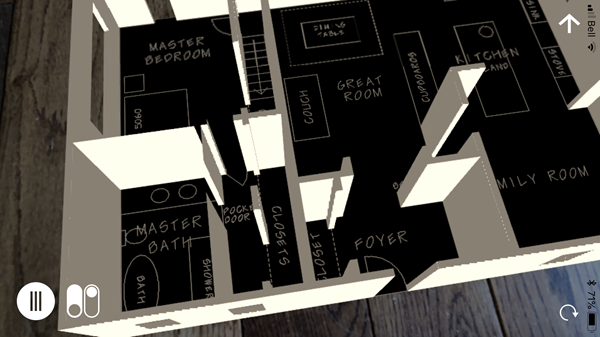

So, with that knowledge, I rasterized a CAD dataset and added that as a backdrop to replace the floor:

Or here with two backdrops, one CAD and the other a grass texture:

So that’s how we can add textures and rasters as a backdrop on a 3D model, but there are other occasions we can use this too…

DEMs and Land Surface Models in FMEAR

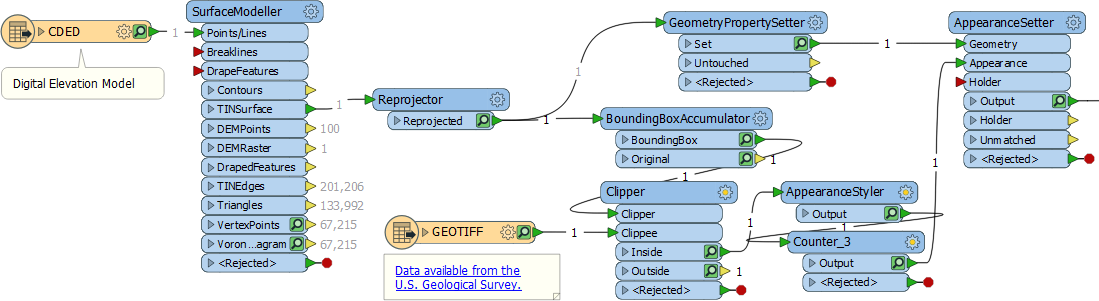

Another 3D object that FME can handle is a DEM (Digital Elevation Model). If I start with a raster DEM I can easily create a 3D surface from it with the SurfaceModeller transformer:

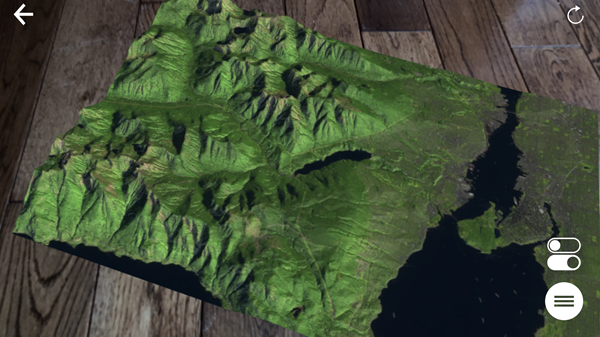

This workspace (FME Hub) creates a model and applies an appearance. The appearance is a USGS raster image, clipped to the area of the DEM. The result is this:

I can even add some other vector features; for example a route, road, or (like here) rivers and lakes:

And, of course, I could add my 3D buildings to the same model, to visualize their location and impact on the environment. And there are yet more potential uses…
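For the DEM case, a rough Python equivalent of turning an elevation grid into a draped surface is sketched below. It is a plain regular-grid triangulation, not what the SurfaceModeller does internally, and it assumes row 0 is the northern edge and that the image has been clipped to exactly the grid’s extent, so texture coordinates can run from 0 to 1.

```python
def dem_to_obj(elev, cell_size=1.0, x0=0.0, y0=0.0):
    """Triangulate a regular elevation grid into OBJ text with texture coordinates.

    elev: list of rows (row 0 = northern edge), each a list of heights; at least 2 x 2.
    """
    rows, cols = len(elev), len(elev[0])
    if rows < 2 or cols < 2:
        raise ValueError("need at least a 2 x 2 grid")
    lines = []
    for r in range(rows):
        for c in range(cols):
            x = x0 + c * cell_size
            y = y0 + (rows - 1 - r) * cell_size  # row 0 sits at the top (north)
            lines.append(f"v {x} {y} {elev[r][c]}")
            # UVs span 0..1 so an image clipped to the grid extent drapes over it.
            lines.append(f"vt {c / (cols - 1)} {(rows - 1 - r) / (rows - 1)}")

    def idx(r, c):
        return r * cols + c + 1  # 1-based; each v has a vt with the same index

    for r in range(rows - 1):
        for c in range(cols - 1):
            a, b = idx(r, c), idx(r, c + 1)
            d, e = idx(r + 1, c), idx(r + 1, c + 1)
            # Two triangles per cell, counter-clockwise when viewed from above.
            lines.append(f"f {d}/{d} {e}/{e} {b}/{b}")
            lines.append(f"f {d}/{d} {b}/{b} {a}/{a}")
    return "\n".join(lines) + "\n"
```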

Other Suggested FMEAR Uses

I think the app is versatile enough that there are many other uses for it. If you attended the recent FME 2018 World Tour, you may have noticed us presenting AR data to the entire room. It’s easy to push device output to a projector. So even with the most basic AR model, you can use the FME AR app to present to an audience in a genuinely novel way.

Got non-spatial data? Why not try converting it to a 3D bar graph? It should only need a square polygon per bar, extruded to the value of an attribute. Or maybe take a game (like Dmitri’s “Shallow Blue” game) and create its board in AR.

What about other data types? Point clouds don’t sound like the most natural fit, and it’s true that coercing each point to a 3D sphere takes far too long for little result. But what I did find is that the PointCloudSurfaceBuilder transformer used in a workspace (FME Hub) converts a point cloud to a surface that you can easily write as an AR model. I do recommend using a PointCloudThinner transformer when experimenting with this; otherwise, processing time could be quite lengthy.
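A 3D bar graph really is just repeated extrusion. This sketch assumes z is up and uses arbitrary bar width, gap, and scale values; it writes each bar as its own named OBJ object (`o bar_<n>`) so the bars stay separate.

```python
def bar_chart_obj(values, width=1.0, gap=0.5, scale=1.0):
    """One square footprint per value, extruded to value * scale, as OBJ text."""
    lines, offset = [], 0
    for k, value in enumerate(values):
        x0 = k * (width + gap)
        x1, h = x0 + width, value * scale
        square = [(x0, 0), (x1, 0), (x1, width), (x0, width)]  # CCW seen from above
        lines.append(f"o bar_{k}")
        lines += [f"v {x} {y} 0" for x, y in square]
        lines += [f"v {x} {y} {h}" for x, y in square]
        b = [offset + i for i in range(1, 5)]  # bottom vertex indices (1-based)
        t = [offset + i for i in range(5, 9)]  # top vertex indices
        lines.append(f"f {b[3]} {b[2]} {b[1]} {b[0]}")  # bottom, facing down
        lines.append(f"f {t[0]} {t[1]} {t[2]} {t[3]}")  # top, facing up
        for i in range(4):
            j = (i + 1) % 4
            lines.append(f"f {b[i]} {b[j]} {t[j]} {t[i]}")  # side walls
        offset += 8  # OBJ vertex indices keep counting across objects
    return "\n".join(lines) + "\n"
```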
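Thinning itself is simple to reason about. This sketch shows two common approaches, keeping every nth point or keeping one point per XY grid cell; neither is claimed to mirror the PointCloudThinner’s exact options, and the points are plain (x, y, z) tuples rather than FME point cloud features.

```python
import math


def thin_every_nth(points, n):
    """Keep the first point and then every nth point after it."""
    if n < 1:
        raise ValueError("n must be at least 1")
    return list(points[::n])


def thin_by_grid(points, cell):
    """Keep only the first point that falls in each cell of an XY grid of size `cell`."""
    kept, seen = [], set()
    for x, y, z in points:
        key = (math.floor(x / cell), math.floor(y / cell))
        if key not in seen:
            seen.add(key)
            kept.append((x, y, z))
    return kept
```

Grid thinning keeps coverage even where the original density varies, whereas every-nth thinning preserves whatever density pattern the scan had.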

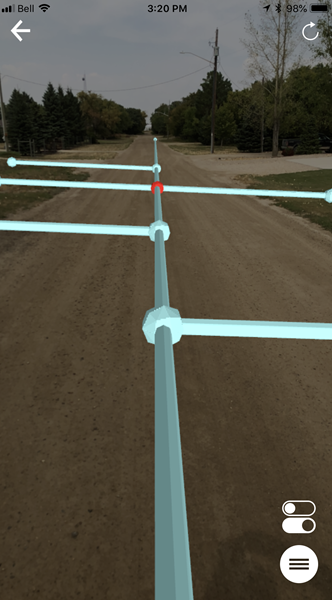

Line features are an interesting possibility now that the Bufferer transformer can buffer in 3D. You could create a workspace (FME Hub) that reads tunnels or pipelines (above or below ground) and writes them to fmear. Since you can turn layers on and off in the FME AR app, you can visualize such features even if they are subsurface. For example, I might add plumbing/pipes to my building model, so that I could see them when I turn off the floor layer. Or I might even visualize them in the real world:

That really fires me up. I hope that in the not-too-distant future, we’ll be able to live stream AR data to field crews, for them to inspect assets such as pipelines or electrical components. Maybe we could even do it using wearable technology like smart glasses. That would be fantastic. But these ideas bring up the question of how data fits in the real world which, along with some other functionality, is definitely on our radar…
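For the pipeline idea, buffering a line in 3D amounts to sweeping a circle along it. This sketch builds an open-ended tube around a single straight segment; it is a rough stand-in for the Bufferer, not its algorithm, and a real pipe network would chain segments and add end caps or joints.

```python
import math


def _cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]


def _unit(v):
    length = math.sqrt(sum(c * c for c in v))
    if length == 0:
        raise ValueError("zero-length vector")
    return [c / length for c in v]


def tube_obj(p0, p1, radius, sides=8):
    """OBJ text for an open-ended tube of `radius` around the 3D segment p0 -> p1."""
    axis = _unit([b - a for a, b in zip(p0, p1)])
    # Any helper not parallel to the axis yields two unit vectors u, v perpendicular to it.
    helper = [0.0, 0.0, 1.0] if abs(axis[2]) < 0.9 else [1.0, 0.0, 0.0]
    u = _unit(_cross(axis, helper))
    v = _cross(axis, u)  # already unit length because axis and u are orthonormal
    lines = []
    for centre in (p0, p1):  # ring at p0 -> indices 1..sides, ring at p1 -> sides+1..2*sides
        for k in range(sides):
            ang = 2 * math.pi * k / sides
            coords = (centre[i] + radius * (math.cos(ang) * u[i] + math.sin(ang) * v[i])
                      for i in range(3))
            lines.append("v " + " ".join(f"{c:.6f}" for c in coords))
    for k in range(sides):
        a, b = k + 1, (k + 1) % sides + 1
        lines.append(f"f {a} {b} {sides + b} {sides + a}")  # outward-facing side quad
    return "\n".join(lines) + "\n"
```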

Future Updates for FME and Augmented Reality

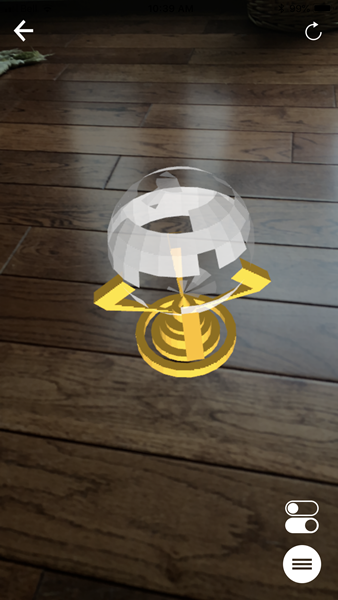

Primarily we designed this app to look at 3D data in a new way, on a platform that is convenient and easy for any FME user. It does that, but I’m sure you can see further possibilities. In fact, if I projected a 3D crystal ball onto my desk and looked closely, I think I would see a direct Revit to FME AR translation in the not too distant future (a mysterious voice whispers “2019” to me)!

And there are other improvements we are considering too…

AR Geolocation

FME exports models with coordinate system information, but that information isn’t yet used by the app.

In other words, for now, you’ll have to shuffle the data around on screen until it fits the real-world picture. FME AR won’t automatically lock data in its correct spatial position.

Geolocation of that type is possible, but… well we are looking for the motivation to implement that. If you really, really want that functionality – or have a project that would benefit greatly from georeferenced AR models – then let us know through the usual channels.

AR Feature Intelligence

Once we have geolocation, for me, the next logical step is to give features intelligence using attributes; i.e., you could tap on an object and information is revealed. I think that is still quite far off, not least because OBJ format doesn’t support attributes. But it’s certainly on my radar and Apple has a new format coming soon (USDZ) so maybe that will help. Speaking of which…

ARKit 2

FME AR is based on Apple’s ARKit package, and version 2 of that was recently announced. It has the intriguing idea of shared AR experiences, among other things, so in the future, we may support some of that functionality too. More news as I get it.

Do-It-Yourself Updates with Open-Sourced Code

Don’t forget that Safe has released the code for FME AR as open source; so if there are updates you really can’t wait for, you can always make them yourself. The iOS source code is already available on GitHub. The Android source code is just going through final vetting; check here on GitHub in the near future, or just search through our repositories.

Summary

And that’s about it for FME AR for today. Like I mentioned, a lot of the work is getting data into 3D, which is not necessarily unique to AR. If you need assistance creating 3D models, then there are plenty of resources on the FME Knowledgebase. You can also ask for help in the Q+A forum, which is usually very quick to respond.

I mentioned that Android is now supported, and the app is on the Google Play store. To check if your device is compatible, here’s a link to the list of devices supported by the library we use.

And finally, I need to mention that the FME 2019 beta has a reader for fmear format, so you can check your model in the 2019 Data Inspector before trying it in the FME AR app. Do be aware that at this point FME 2019 is not just beta, it’s very beta! While it’s OK for checking an AR model, I wouldn’t use it for production work of any sort.

I hope you found this article interesting, and if you do have any questions – or good demos of data in FME AR – please do let me know.

Mark Ireland

Mark, aka iMark, is the FME Evangelist (est. 2004) and has a passion for FME Training. He likes being able to help people understand and use technology in new and interesting ways. One of his other passions is football (aka soccer). He likes both technology and soccer so much that he wrote an article about the two together! Who would’ve thought? (Answer: iMark)